This is the 1960s Civil Rights Leader Whom Fani Willis’ Father Called a ‘Cracker’

The father of Atlanta District Attorney Fani Willis was caught in a recorded interview making several racist comments about a white politician, according to a […]

Tony Bennett, who died on July 21 at the age of 96, is known for his 1962 hit “I Left My Heart in San Francisco,” […]

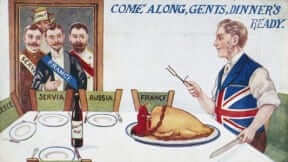

The Treaty of Versailles was signed in Versailles, France, on June 28, 1919. Neither the winners nor the losers of World War I were happy […]

It’s been 50 years since we first landed on the moon. It’s been 46 years, seven months, and four days since we last departed from […]

In 1962, President John F. Kennedy committed the United States to put an American on the moon and return him safely to Earth by the […]

The summer season has ripped off the thin scab that covered an American wound, revealing a festering disagreement about the nature and origins of the […]

In an excellent essay making the case that pop music was at its zenith in 1984, Julie Kelly writes that the era represented a patriotic […]

Why didn’t Sir Isaac Newton, the famed English mathematician, physicist, and astronomer, invent the automobile? Newton was a clever guy. He came up with calculus, […]

When I was a boy, everyone said the epitome of Shakespeare is Hamlet’s soliloquy. The soliloquy, the one from Act III, the one that poses […]

Many observers were quick to correct Colin Kaepernick’s recent selective quoting from Frederick Douglass’s speech, “What to the Slave is the Fourth of July?” They […]

Colin Kaepernick is woke. He wears pig socks to protest cops, whom he reckons are modern-day slave catchers, and even cosplays as Angela Davis. […]

No one would mistake the Supreme Court’s liberal justices for adherents to the concept of “originalism,” or the belief that one should consider—first and foremost—the […]

The following is an excerpt from Calvin Coolidge’s (lengthy) speech in Philadelphia on July 5, 1926, marking the 150th anniversary of the Declaration of Independence. […]

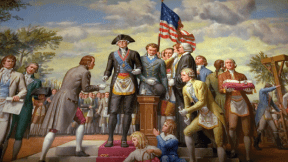

The colonists’ quest for independence from the British in 1776 began with a goal: “to assume among the powers of the earth, the separate and equal […]

Nearly 1,900 years ago, Roman Emperor Hadrian built a great wall across Britain. It was 73 miles long and divided the Roman province of Britannia […]

James Madison is justly celebrated for his frequently stated opinion that “all power in just and free Government is derived from compact.” But Madison’s view […]