Proponents of renewable energy claim that wind and solar energy are now cheaper than fossil fuels. According to USA Today, “Renewables close in on fossil fuels, challenging on price.” A Forbes headline agrees: “Renewable Energy Will Be Consistently Cheaper Than Fossil Fuels.” The “expert” websites agree: “Renewable Electricity Levelized Cost Of Energy Already Cheaper,” asserts “energyinnovation.org.”

They’re all wrong. Renewable energy is getting cheaper every year, but it is a long way from competing with natural gas, coal, or even nuclear power, if nuclear power weren’t drowning in lawsuits and regulatory obstructions.

With both wind and solar energy, the accounting has to cover not only the cost of the solar panels and wind turbines, but also the cost of the inverters, grid upgrades, and storage assets necessary to balance out the intermittent power.

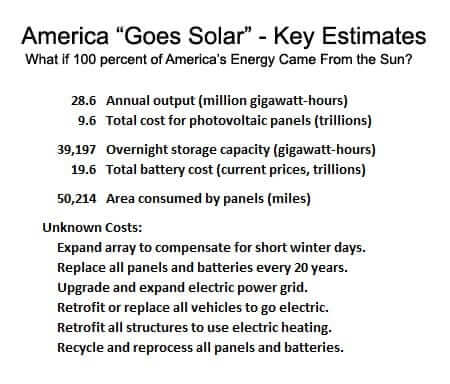

Taking all variables into account, what might it cost for the entire U.S. to get 100 percent of its energy from solar energy?

Speaking the Language of Energy and Electricity

According to the U.S. Energy Information Administration, the United States in 2017 consumed 97.7 quadrillion BTUs of energy. BTUs, or British Thermal Units, are often used by economists to measure energy. One BTU is the energy required to heat one pound of water by one degree Fahrenheit.

If we’re going to understand what it takes to go solar, and usher in the great all-electric age where our heating and our vehicles are all part of the great green grid, then we have to convert BTUs into watt-hours. That’s easy. One kilowatt-hour is equal to 3,412 BTUs. Following the math, one quadrillion BTUs is equal to 293,071 gigawatt-hours. Accordingly, 97.7 quadrillion BTUs is equal to 28.6 million gigawatt-hours. So how much would it cost for a solar energy infrastructure capable of delivering to America 28.6 million gigawatt-hours per year?
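
The conversion above can be checked with a few lines of Python. This is only a sketch of the arithmetic: the function name is illustrative, and the factor of 3,412.14 BTUs per kilowatt-hour is an assumption standing in for the rounded 3,412 quoted in the text.

```python
# Convert the 2017 U.S. energy consumption figure from BTUs to gigawatt-hours.
BTU_PER_KWH = 3412.14      # assumption: the article rounds this to 3,412
KWH_PER_GWH = 1_000_000    # one gigawatt-hour is a million kilowatt-hours

def quads_to_gwh(quads: float) -> float:
    """Convert quadrillion BTUs ("quads") to gigawatt-hours."""
    btu = quads * 1e15
    return btu / BTU_PER_KWH / KWH_PER_GWH

gwh_per_quad = quads_to_gwh(1.0)
total_gwh = quads_to_gwh(97.7)

print(f"1 quad     = {gwh_per_quad:,.0f} GWh")
print(f"97.7 quads = {total_gwh / 1e6:.1f} million GWh")
```

Run as written, this reproduces the 293,071 gigawatt-hours per quadrillion BTUs and the 28.6 million gigawatt-hour total used in the rest of the article.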

Solar panels are sold by the watt; residential systems are typically sized by kilowatt output, and large commercial solar “farms” are typically measured in megawatt output. A gigawatt is a billion watts. So generating 28.6 million gigawatt-hours in one year requires a lot of solar panels. How many?

To properly scope a solar system capable of generating 28.6 million gigawatt-hours per year, you have to take into account the “yield” of the system. A photovoltaic solar panel only generates electricity when the sun is shining. If you assume the “full sun equivalent” hours of solar production are eight hours per day (solar panels don’t generate nearly as much power when the sun is not directly overhead), then you can assume that in a year these panels will generate power for 2,922 hours. Since 28.6 million gigawatt-hours is equivalent to 28.6 quadrillion watt-hours, dividing that by 2,922 means you need a system capable of generating 9.8 trillion watts in full sun. How much will that cost?
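
For readers who want to vary the assumptions, the sizing step can be sketched as follows. The function name is illustrative, and the 365.25-day year is an assumption chosen because it yields the 2,922 hours quoted above.

```python
# Size a solar fleet from annual demand and assumed full-sun-equivalent hours.
DAYS_PER_YEAR = 365.25  # assumption: average year length, giving 2,922 hours at 8 h/day

def required_capacity_watts(annual_gwh: float, sun_hours_per_day: float) -> float:
    """Full-sun capacity in watts needed to produce annual_gwh in one year."""
    annual_wh = annual_gwh * 1e9                       # gigawatt-hours to watt-hours
    producing_hours = sun_hours_per_day * DAYS_PER_YEAR
    return annual_wh / producing_hours

producing_hours = 8 * DAYS_PER_YEAR
capacity_w = required_capacity_watts(28.6e6, 8)

print(f"Producing hours per year: {producing_hours:,.0f}")
print(f"Required capacity: {capacity_w / 1e12:.1f} trillion watts")
```

Because capacity scales inversely with producing hours, any assumption lower than eight full-sun hours per day raises the required capacity proportionally.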

A best case total cost for that much solar photovoltaic capacity would have to be at least $1.00 per watt. Because the labor and substrate costs to install photovoltaic solar panels have already fallen dramatically, it is unrealistic to expect the cost per watt to ever drop under $1. Currently, costs for large commercial systems are still just under $2 per watt. So the cost for solar panels to power the entire energy requirements of the United States would be at least $10 trillion.
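
A short sketch of the panel cost at the two price points mentioned above. The helper name is illustrative, and $2.00 per watt is used as a round stand-in for "just under $2."

```python
# Panel cost for the fleet at the best-case and current per-watt prices.
CAPACITY_W = 9.8e12  # full-sun capacity from the article, in watts

def panel_cost_trillions(capacity_w: float, dollars_per_watt: float) -> float:
    """Installed cost in trillions of dollars."""
    return capacity_w * dollars_per_watt / 1e12

best_case = panel_cost_trillions(CAPACITY_W, 1.00)
current = panel_cost_trillions(CAPACITY_W, 2.00)  # assumption: rounds "just under $2"

print(f"At $1.00 per watt: ${best_case:.1f} trillion")
print(f"At $2.00 per watt: ${current:.1f} trillion")
```

The best case comes to $9.8 trillion, which the article rounds to $10 trillion; at today's prices the figure roughly doubles.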

But wait. There’s more. Much more.

“Getting Cheaper All the Time”? Yes, But . . .

Renewable energy boosters use what’s called the “levelized lifetime cost” to evaluate how much wind and solar energy cost compared to what natural gas or nuclear power costs. To do this, they take the installation costs, plus the lifetime operating costs, and divide that by the lifetime electricity production. On this basis, they come up with an average cost per kilowatt-hour, and when they do this, renewables look pretty good.
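
The calculation described above can be sketched in a few lines. This follows the simplified description in the text (installation plus lifetime operating costs, divided by lifetime production) and omits the discounting of future costs and output that published levelized cost figures normally include. The one-megawatt plant and the $10,000 annual operating cost are hypothetical inputs chosen for illustration; the $1 per watt, eight full-sun hours, and 20-year life come from elsewhere in this article.

```python
# Simplified, undiscounted levelized cost as described in the text.
def simple_levelized_cost(install_cost: float, annual_operating_cost: float,
                          lifetime_years: int, annual_kwh: float) -> float:
    """Dollars per kilowatt-hour over the life of the plant, without discounting."""
    lifetime_cost = install_cost + annual_operating_cost * lifetime_years
    lifetime_kwh = annual_kwh * lifetime_years
    return lifetime_cost / lifetime_kwh

capacity_kw = 1_000                       # hypothetical 1-megawatt solar farm
annual_kwh = capacity_kw * 8 * 365.25     # article's 8 full-sun hours per day
lcoe = simple_levelized_cost(
    install_cost=1_000_000,               # $1 per watt, the article's best case
    annual_operating_cost=10_000,         # hypothetical placeholder
    lifetime_years=20,                    # article's equipment life
    annual_kwh=annual_kwh,
)

print(f"Simplified levelized cost: ${lcoe:.3f} per kWh")
```

Nothing in this formula accounts for storage, transmission, or seasonal overcapacity, which is the gap the following sections address.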

What this type of analysis ignores are the many additional, and very costly, adaptations necessary to deliver renewable power. The biggest one is storage, which is breezily dismissed in most accounts as “getting cheaper all the time.” But while storage is getting cheaper, it’s still spectacularly expensive.

The only way intermittent renewable energy can function today is by having “peaking plants,” usually burning natural gas, spin into production whenever the wind falters or the sun goes behind a cloud. To achieve 100 percent renewable energy, of course, these peaking plants have to be decommissioned and replaced by giant batteries.

An example of this is in Moss Landing, on the Central California Coast, where a natural gas peaker plant is being decommissioned and replaced by a battery farm that will store an impressive 2.2 gigawatt-hours of electricity. Not impressive is the fact that, to date, the installation cost for this massive undertaking has not been disclosed, even though all these costs will be passed on to captive consumers. It is possible, however, to speculate as to the cost.

The current market price for grid-scale electricity storage, based on credible analysis reported in, among other sources, Greentech Media and the New York Times, is between $300 and $400 per kilowatt-hour. On this basis, the installation cost for a 2.2 gigawatt-hour system would be between $660 million and $880 million. Chances are that PG&E will spend more than that, since the “balance of plant,” including inverters, utility interties, and site preparation and support facilities, will all be part of the capital costs. But using rough numbers, a capital cost of $500 million per gigawatt-hour (that is, $500 per kilowatt-hour) is not unreasonable. It might be optimistic for today, but battery costs do continue to decline, which may offset other costs that may be understated.
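
The Moss Landing estimate can be reproduced with a short sketch. The function name is illustrative; the prices and the 2.2 gigawatt-hour capacity are the figures quoted above.

```python
# Estimated installation cost of a 2.2 GWh battery farm at several unit prices.
KWH_PER_GWH = 1_000_000

def storage_cost_dollars(capacity_gwh: float, dollars_per_kwh: float) -> float:
    """Capital cost of battery storage at a given price per kilowatt-hour."""
    return capacity_gwh * KWH_PER_GWH * dollars_per_kwh

for price in (300, 400, 500):
    cost = storage_cost_dollars(2.2, price)
    print(f"${price} per kWh -> ${cost / 1e6:,.0f} million")
```

A price of $500 per kilowatt-hour is the same thing as $500 million per gigawatt-hour, the rate used in the national estimate that follows.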

So based on a price of $500 million per gigawatt-hour of storage, how much money would it cost to deploy energy storage, and how much would that add to the cost of electricity?

Why Can’t We Just Use Batteries?

As noted, in 2017, if all energy consumed in the United States had taken the form of electricity, it would have been equal to 28.6 million gigawatt-hours. That comes out to 78,393 gigawatt-hours per day. But each day, it has to be assumed that the solar power is only feeding energy into the grid, at most, about half that time. Batteries are necessary to capture that intermittent power and deliver it when the sun is down or behind clouds.

It’s easier to make fairly indisputable battery cost estimates by using conservative assumptions. Therefore, assume that solar can supply reliable power 12 hours a day. That’s a stretch, but it means the calculations to follow will be a best case. If the United States is supposed to go completely solar, we would need to install grid-scale electricity storage equivalent to 39,197 gigawatt-hours, half of the 78,393 gigawatt-hours consumed each day. In this manner, during the 12 hours of daily solar production, half of the output will be used immediately, and the other half will be stored in batteries. Cost? $19.6 trillion.
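
The overnight storage estimate, sketched under the article's assumptions: 12 hours of reliable solar per day and $500 million per gigawatt-hour of storage. The variable names are illustrative, and the 365.25-day year is an assumption that matches the daily figure quoted above.

```python
# Overnight battery storage needed for an all-solar grid, and its cost.
ANNUAL_DEMAND_GWH = 97.7 * 293_071   # 2017 consumption converted to GWh
DAYS_PER_YEAR = 365.25               # assumption: average year length
COST_PER_GWH = 500e6                 # article's rough storage price, in dollars
SOLAR_HOURS_PER_DAY = 12             # article's best-case assumption

daily_demand_gwh = ANNUAL_DEMAND_GWH / DAYS_PER_YEAR
# Energy used while the sun is down has to come out of storage.
stored_fraction = (24 - SOLAR_HOURS_PER_DAY) / 24
storage_gwh = daily_demand_gwh * stored_fraction
storage_cost = storage_gwh * COST_PER_GWH

print(f"Daily demand:   {daily_demand_gwh:,.0f} GWh")
print(f"Storage needed: about {storage_gwh / 1000:.1f} thousand GWh")
print(f"Storage cost:   ${storage_cost / 1e12:.1f} trillion")
```

Reducing `SOLAR_HOURS_PER_DAY` to a less generous figure raises both the stored fraction and the cost.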

That’s a ridiculously huge number, but we’re not finished with this analysis. There’s the pesky problem of changing seasons.

Only So Many Sunny Hours in a Day

Even in sunny California, the difference between sunshine on the winter solstice and the summer solstice is dramatic. In Sacramento, the longest day is 14.4 hours, and the shortest day is 9.2 hours. Because there are also far more cloudy days during a California winter than during a California summer, solar output in winter is less than half what it is during the summer months. Solar photovoltaic production in December is typically only about one-third what it is in June.

As an aside, wind resources are also seasonal. For example, California’s state government has produced an analysis entitled “Visualization of Seasonal Variation in California Wind Generation” that makes this seasonal variation in wind resources clear. Reviewing this data reveals an obvious variation between the months of March through August, when winds are stronger, compared to September through February, when winds are considerably weaker. This seasonal wind variation, unfortunately, overlaps significantly with the seasonal solar variation. The consequences for renewable energy are huge.

Again for the sake of clarity, some broad but conservative assumptions are useful. A best case assessment of this variation would be to estimate the yield of solar and wind assets to be half as productive in the fall and winter as they are in the spring and summer. This means that to achieve a 100 percent renewable portfolio, two difficult choices present themselves. Either the wind and solar capacity has to be expanded to be sufficient even in fall and winter, when there is relatively little sun and wind, or battery capacity has to be expanded so much as to not store energy for half-a-day, each day, but for half-a-year, each year. This is a stupendous challenge.

To compensate for seasonality, supplemental energy storage would require not 12 hours of capacity, to be filled and released every 24 hours, but 180 days of stored capacity, capable of storing summer surplus energy, to be released during fall and winter. Doing that with batteries would cost hundreds of trillions of dollars at the very least; at $500 million per gigawatt-hour, 180 days of stored demand runs into the thousands of trillions. It is absolutely impossible. Coping with seasonal variation therefore requires constructing enough solar and wind assets to function even in winter when there’s less sun and less wind, thereby creating ridiculous overcapacity in spring and summer.

The Cost of Going 100 Percent Solar

Even at $1 per watt installed, it would cost at least $10 trillion just to install the photovoltaic panels. Just to store solar energy for nighttime use, using batteries, would cost nearly another $20 trillion, although it is fair to assume that storage costs—unlike the costs for solar panels—will continue to fall.

Building overcapacity, probably in America’s sunny Southwest, to deliver solar power through the cold winter would probably require another $10 trillion worth of panels. And to deliver power across the continent, from the sunny Southwest to the frigid Northeast, would require revolutionary upgrades to the national power grid, probably using high-voltage direct current transmission lines, a technology that has yet to be proven at scale. Expect to spend several trillion on grid upgrades.

Then, of course, there’s the cost to retrofit every residential, commercial, and industrial space to use electric heating, and the cost to retrofit or replace every car, truck, tractor, and other transportation asset to run on 100 percent electric power.

When you’re talking about this many trillions, you’re talking serious money! Figure at least $50 trillion for the whole deal.
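
A rough tally of the itemized figures shows how the $50 trillion floor is reached. The dictionary labels are illustrative, and no separate figures are assumed for grid upgrades or retrofits; the sketch only reports what is left for them.

```python
# Itemized costs from the article, in trillions of dollars.
itemized = {
    "solar panels at $1 per watt": 10.0,
    "overnight battery storage": 19.6,
    "winter overcapacity panels": 10.0,
}
TOTAL_FLOOR = 50.0  # the article's "at least $50 trillion"

itemized_total = sum(itemized.values())
remainder = TOTAL_FLOOR - itemized_total  # left for grid upgrades and retrofits

for label, cost in itemized.items():
    print(f"{label:<30} ${cost:>5.1f} trillion")
print(f"{'itemized total':<30} ${itemized_total:>5.1f} trillion")
print(f"{'grid upgrades and retrofits':<30} ${remainder:>5.1f} trillion")
```

The three itemized lines account for about $40 trillion, leaving roughly $10 trillion of the floor for grid upgrades plus the conversion of heating and transportation.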

Another consideration is the longevity of the equipment. Solar panels begin to degrade after 20 years or so. Inverters, required to convert direct current coming from solar panels into alternating current, rarely last 20 years. Batteries as well have useful lives that rarely exceed 20 years. If America “goes solar,” Americans need to understand that the entire infrastructure would need to be replaced every 20 years.

Not only is this spectacularly expensive, but it brings up the question of recycling and reuse, which are additional questions that solar proponents haven’t fully answered. A solar array large enough to produce nearly 10,000 gigawatts in full sun would occupy about 50,000 square miles. Imagine tearing out that much hardware every two decades. Reprocessing every 20 years a quantity of batteries capable of storing nearly 40,000 gigawatt-hours constitutes an equally unimaginable challenge.
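
To put the scale in physical terms, the sketch below derives the power density implied by the land estimate and the yearly replacement volume. It assumes, purely for illustration, that the fleet is replaced at a steady rate of one-twentieth per year; the variable names are likewise illustrative.

```python
# Implied land-use density and steady-state replacement volume.
SQ_METERS_PER_SQ_MILE = 2_589_988.11

capacity_w = 9.8e12          # full-sun solar capacity from the article
area_sq_miles = 50_000       # land estimate from the article
storage_gwh = 39_197         # overnight battery capacity from the article
service_life_years = 20      # replacement cycle from the article

density_w_per_m2 = capacity_w / (area_sq_miles * SQ_METERS_PER_SQ_MILE)
# Assumption: replacement is spread evenly, one-twentieth of the fleet per year.
panels_replaced_gw_per_year = capacity_w / 1e9 / service_life_years
batteries_replaced_gwh_per_year = storage_gwh / service_life_years

print(f"Implied density: {density_w_per_m2:.1f} watts per square meter")
print(f"Panels replaced: {panels_replaced_gw_per_year:,.0f} GW per year")
print(f"Batteries replaced: {batteries_replaced_gwh_per_year:,.0f} GWh per year")
```

On these assumptions, the country would be retiring and reprocessing about 490 gigawatts of panels and nearly 2,000 gigawatt-hours of batteries every year, indefinitely.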

To the extent the United States does not go 100 percent solar, wind is an option. But the costs, infrastructure challenges, space requirements, and reprocessing demands associated with wind power are even more daunting than they are with solar. Americans, for all their wealth, would have an extremely difficult time moving to a wind and solar economy. For people living in colder climates, even in developed nations, it would be an even more daunting task. For people living in still developing nations, it is an unthinkable, cruel option.

The path forward for renewable energy is for utilities to purchase power, from all operators, that is guaranteed 24 hours a day, 365 days a year. This is the easiest way to create a level competitive environment. Purveyors of solar power would have to factor into their bids the cost to store energy, or acquire energy from other sources, and their prices would have to include those additional costs. It is extremely misleading to suggest that the lifetime “levelized cost” is only based on how much the solar farm costs. Add the overnight storage costs. Take into account costs to maintain constant deliveries despite interseasonal variations. Account for that. And then compete.


Photo Credit: Robert Nickelsberg/Getty Images